Record of experiments, readings, links, videos and other things that I find on the long road.

Friday, August 29, 2025

The Productivity Trap: Perils and Promises of AI Coding

Tuesday, August 23, 2022

Listening to test smells: detecting lack of cohesion and violations of encapsulation

Introduction.

We’d like to show another example of how difficulties found while testing can signal design problems in our code[1].

We believe that a good design is one that supports the evolution of a code base at a sustainable pace and that testability is a requirement for evolvability. This is not a new idea; we can find it in many places.

Michael Feathers says “every time you encounter a testability problem, there is an underlying design problem”[2].

Nat Pryce and Steve Freeman also think that this relationship between testability and good design exists[3]:

“[…] We’ve found that the qualities that make an object easy to test also make our code responsive to change”

and also use it to detect design problems and know what to refactor:

“[…] where we find that our tests are awkward to write, it’s usually because the design of our target code can be improved”

and to improve their TDD practice:

“[…] sensitise yourself to find the rough edges in your tests and use them for rapid feedback about what to do with the code. […] don’t stop at the immediate problem (an ugly test) but look deeper for why I’m in this situation (weakness in the design) and address that.”[4]

This is why they devoted talks, several posts and a chapter of their GOOS book (chapter 20) to listening to the tests[5] and even added it to the TDD cycle steps:

Next we’ll show you an example of how we recently applied this at a client.

The problem.

Recently I was asked to help a pair that was developing a new feature in a legacy code base of one of our clients. They were having problems with the following test[6]:

that was testing the RealTimeGalleryAdsRepository class:

They had managed to test drive the functionality but they were unhappy with the results. The thing that was bothering them was the resetCache method in the RealTimeGalleryAdsRepository class. As its name implies, its intent was to reset the cache. This would have been fine, if this had been a requirement, but that was not the case. The method had been added only for testing purposes.

Looking at the code of RealTimeGalleryAdsRepository you can learn why.

The cachedSearchResult field is static and that was breaking the isolation between tests.

Even though they were using different instances of RealTimeGalleryAdsRepository in each test, they were sharing the same value of the cachedSearchResult field because static state is associated with the class. So a new public method, resetCache, was added to the class only to ensure isolation between different tests.
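The client's listing is not reproduced here, but the isolation problem can be shown with a minimal sketch. The class name StaticCacheRepository, the String result and the Supplier collaborator are hypothetical stand-ins for the real repository, assumed only for illustration:

```java
import java.util.function.Supplier;

// Hypothetical stand-in for a repository that caches in a static field.
class StaticCacheRepository {
    // Static state belongs to the class, so every instance shares it.
    private static String cachedSearchResult;

    private final Supplier<String> search;

    StaticCacheRepository(Supplier<String> search) {
        this.search = search;
    }

    String searchResult() {
        if (cachedSearchResult == null) {
            cachedSearchResult = search.get();
        }
        return cachedSearchResult;
    }

    // Exists only so that tests can restore isolation between them.
    public void resetCache() {
        cachedSearchResult = null;
    }
}
```

In this sketch, a second instance created by another test would still return the value cached by the first one until resetCache is called.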

Adding code to your production code base just to enable unit testing is a unit testing anti-pattern[7], but they didn’t know how to get rid of the resetCache method, and that’s why I was called in to help.

Let’s examine the tests in RealTimeGalleryAdsRepositoryTests to see if they can point to more fundamental design problems.

Another thing we can notice is that the tests can be divided into two sets that are testing two very different behaviours:

- One set of tests, comprised of maps_all_ads_with_photo_to_gallery_ads, ignore_ads_with_no_photos_in_gallery_ads and when_no_ads_are_found_there_are_no_gallery_ads, is testing the code that obtains the list of gallery ads;

- whereas the other set, comprised of when_cache_has_not_expired_the_cached_values_are_used and when_cache_expires_new_values_are_retrieved, is testing the life and expiration of some cached values.

This lack of focus was a hint that the production class might lack cohesion, i.e., it might have several responsibilities.

It turns out that there was another code smell that confirmed our suspicion. Notice the boolean parameter useCache in RealTimeGalleryAdsRepository constructor.

That was a clear example of a flag argument[8]. useCache was making the class behave differently depending on its value:

- It cached the list of gallery ads when useCache was true.

- It did not cache them when useCache was false.

After seeing all this, I told the pair that the real problem was the lack of cohesion and that we’d have to go more object-oriented in order to avoid it. After that refactoring, the need for the resetCache method would disappear.

Going more OO to fix the lack of cohesion.

To strengthen cohesion we need to separate concerns. Let’s look at the problem from the point of view of the client of the RealTimeGalleryAdsRepository class (this point of view is generally very useful because the test is also a client of the tested class) and think about what it would want from the RealTimeGalleryAdsRepository. It would be something like “obtain the gallery ads for me”. That would be the responsibility of the RealTimeGalleryAdsRepository, and that’s the role that the GalleryAdsRepository interface represents.

Notice that to satisfy that responsibility we do not need to use a cache, only get some ads from the AdsRepository and map them (the original functionality also included some enrichments using data from other sources but we removed them from the example for the sake of simplicity). Caching is an optimization that we might do or not. It’s a refinement or embellishment to how we satisfy the responsibility but it’s not necessary to satisfy it. In this case, caching changes the “how” but not the “what”.

This matches very well with the Decorator design pattern because this pattern “comes into play when there are a variety of optional functions that can precede or follow another function that is always executed”[9]. Using it would allow us to attach additional behaviour (caching) to the basic required behaviour that satisfied the role that the client needs (“obtain the gallery ads for me”). This way instead of having a flag parameter (like useCache in the original code) to control whether we cache or not, we might add caching by composing objects that implement the GalleryAdsRepository. One of them, RealTimeGalleryAdsRepository, would be in charge of getting ads from the AdsRepository and mapping them to gallery ads; and the other one, CachedGalleryAdsRepository, would cache the gallery ads.

So we moved the responsibility of caching the ads to the CachedGalleryAdsRepository class which decorated the RealTimeGalleryAdsRepository class.

This is the code of the CachedGalleryAdsRepository class:

and these are its tests:

Notice how we found here again the two tests that were previously testing the life and expiration of the cached values in the test of the original RealTimeGalleryAdsRepository: when_cache_has_not_expired_the_cached_values_are_used and when_cache_expires_new_values_are_retrieved.

Furthermore, looking at them more closely, we can see how, in this new design, those tests are also simpler because they don’t know anything about the inner details of RealTimeGalleryAdsRepository. They only know about the logic related to the life and expiration of the cached values and that, when the cache is refreshed, they call a collaborator that implements the GalleryAdsRepository interface. This means that now we’re caching gallery ads instead of an instance of the SearchResult and we don’t know anything about the AdsRepository.

On a side note, we also improved the code by using the Duration value object from java.time to remove the primitive obsession smell caused by using a long to represent milliseconds.

Another very important improvement is that we don’t need the static field anymore.
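The client's code is not reproduced here. As a minimal sketch of the shape described above, a caching decorator might look like the following. The getAll method name, the use of String for gallery ads and the constructor parameters are our assumptions, not the client's actual code:

```java
import java.time.Clock;
import java.time.Duration;
import java.time.Instant;
import java.util.List;

// Role the client needs: "obtain the gallery ads for me".
// (Method name and element type are assumed for this sketch.)
interface GalleryAdsRepository {
    List<String> getAll();
}

// Decorator that adds caching around any GalleryAdsRepository.
class CachedGalleryAdsRepository implements GalleryAdsRepository {
    private final GalleryAdsRepository decorated;
    private final Clock clock;
    private final Duration timeToExpire;
    // Instance state: no static field is needed.
    private List<String> cachedAds;
    private Instant lastRefresh;

    CachedGalleryAdsRepository(GalleryAdsRepository decorated, Clock clock, Duration timeToExpire) {
        this.decorated = decorated;
        this.clock = clock;
        this.timeToExpire = timeToExpire;
    }

    @Override
    public List<String> getAll() {
        Instant now = clock.instant();
        if (cachedAds == null || hasExpired(now)) {
            // Refresh by delegating to the decorated repository.
            cachedAds = decorated.getAll();
            lastRefresh = now;
        }
        return cachedAds;
    }

    private boolean hasExpired(Instant now) {
        return Duration.between(lastRefresh, now).compareTo(timeToExpire) >= 0;
    }
}
```

Because the decorator only depends on the GalleryAdsRepository interface, its tests can pass in any fake implementation and a controllable Clock, without knowing anything about where the ads come from.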

And what about RealTimeGalleryAdsRepository?

If we have a look at its new code, we can notice that its only concern is how to obtain the list of gallery ads by mapping them from the result of its collaborator AdsRepository, and it does not know anything about caching values. So the new design is more cohesive than the original one.

Notice how we removed both the resetCache method that was before polluting its interface only for testing purposes, and the flag argument, useCache, in the constructor.

We also reduced its number of collaborators because there’s no need for a Clock anymore. That collaborator was needed for a different concern that is now taken care of in the decorator CachedGalleryAdsRepository.

These design improvements are reflected in its new tests. They are now more focused, and can only fail if retrieving the gallery ads breaks. Having only one reason to fail comes from testing a more cohesive unit with only one reason to change. Notice how these tests coincide with the subset of tests concerned with testing the same behaviour in the original tests of RealTimeGalleryAdsRepository:

Persisting the cached values between calls.

You might be asking yourselves: how are we going to ensure that the cached values persist between calls now that we don’t have a static field anymore?

Well, the answer is that we don’t need to keep a static field in our classes for that. The only thing we need is that the composition of CachedGalleryAdsRepository and RealTimeGalleryAdsRepository is created only once, and that we use that single instance for the lifetime of the application. That is a concern that we can address using a different mechanism.

We usually find in legacy code bases that this need to create something only once, and use that single instance for the lifetime of the application is met using the Singleton design pattern described in the design patterns book. The Singleton design pattern intent is to “ensure that only one instance of the singleton class ever exists; and provide global access to that instance”. The second part of that intent, “providing global access”, is problematic because it introduces global state into the application. Using global state creates high coupling (in the form of hidden dependencies and possible actions at a distance) that drastically reduces testability.

Instead we used the singleton pattern[10]. Notice the lowercase letter. The lowercase ’s’ singleton avoids those testability problems because its intent is only to “ensure that only one instance of some class ever exists because its new operator is called only once”. The problematic global access part gets removed from the intent. This is done by avoiding mixing object instantiation with business logic by using separated factories that know how to create and wire up all the dependencies using dependency injection.

We might create this singleton, for instance, by using a dependency injection framework like Guice and its @Singleton annotation.

In this case we coded it ourselves:

Notice the factory method that returns a unique instance of the GalleryAdsRepository interface that caches values. This factory method is never used by business logic; it’s only used by instantiation logic in factories that know how to create and wire up all the dependencies using dependency injection. This doesn’t introduce testability problems because the unique instance will be injected through constructors by factories wherever it is needed.
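The listing itself is not reproduced here. A minimal sketch of the idea, under our own assumptions, could look like this. GalleryAdsRepositoryFactory, ComposedGalleryAdsRepository and GalleryService are hypothetical names, and the composed repository is a stub standing in for the real decorator composition:

```java
import java.util.List;

interface GalleryAdsRepository {
    List<String> getAll();
}

// Stub standing in for the real composition, which would wrap
// RealTimeGalleryAdsRepository in CachedGalleryAdsRepository.
class ComposedGalleryAdsRepository implements GalleryAdsRepository {
    @Override
    public List<String> getAll() {
        return List.of();
    }
}

// Instantiation logic only: business code never calls this class.
class GalleryAdsRepositoryFactory {
    private static GalleryAdsRepository uniqueInstance;

    // Calls `new` once and hands out the same instance afterwards.
    static synchronized GalleryAdsRepository cached() {
        if (uniqueInstance == null) {
            uniqueInstance = new ComposedGalleryAdsRepository();
        }
        return uniqueInstance;
    }
}

// Business logic receives the instance through its constructor,
// so tests can inject a fake instead of reaching for global state.
class GalleryService {
    private final GalleryAdsRepository repository;

    GalleryService(GalleryAdsRepository repository) {
        this.repository = repository;
    }

    int numberOfAds() {
        return repository.getAll().size();
    }
}
```

The wiring code would do something like new GalleryService(GalleryAdsRepositoryFactory.cached()), whereas a test would simply pass its own fake repository to the constructor.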

Conclusions.

We have shown a recent example, found while working for a client, that illustrates how testability problems, if we listen to them, usually point to underlying design problems. In this case the problems in the test were pointing to a lack of cohesion in the production code that was being tested. The original class had too many responsibilities.

We refactored the production code to separate concerns by going more OO applying the decorator design pattern. The result was more cohesive production classes that led to more focused tests, and removed the design problems we had detected in the original design.

Acknowledgements.

I’d like to thank my Codesai colleagues for reading the initial drafts and giving me feedback.

Notes.

[1] We showed another example of this relationship between poor testability and design problems in a previous post: An example of listening to the tests to improve a design.

[2] Listen to his great talk about this relationship: The Deep Synergy Between Testability and Good Design

[3] This is the complete paragraph from chapter 20, Listening to the tests, of the GOOS book: “Sometimes we find it difficult to write a test for some functionality we want to add to our code. In our experience, this usually means that our design can be improved — perhaps the class is too tightly coupled to its environment or does not have clear responsibilities. When this happens, we first check whether it’s an opportunity to improve our code, before working around the design by making the test more complicated or using more sophisticated tools. We’ve found that the qualities that make an object easy to test also make our code responsive to change.”

[4] This quote is from their post Synaesthesia: Listening to Test Smells.

[5] Have a look at this interesting series of posts about listening to the tests by Steve Freeman. It’s a raw version of the content that you’ll find in chapter 20, Listening to the tests, of their book.

[6] We have simplified the client’s code to remove some distracting details and try to highlight its key problems.

[7] Vladimir Khorikov calls this unit testing anti-pattern Code pollution.

[8] A flag Argument is a kind of argument that is telling a function or a class to behave in a different way depending on its value. This might be a signal of poor cohesion in the function or class.

[9] Have a look at the discussion in the chapter devoted to the Decorator design pattern in the great book Design Patterns Explained: A New Perspective on Object-Oriented Design.

[10] Miško Hevery talks about the singleton pattern with lowercase ‘s’ in his talk Global State and Singletons at 10:20: “Singleton with capital ’S’. Refers to the design pattern where the Singleton has a private constructor and has a global instance variable. Lowercase ’s’ singleton means I only have a single instance of something because I only called the new operator once.”

References.

- Growing Object-Oriented Software, Guided by Tests, Steve Freeman, Nat Pryce

- The Deep Synergy Between Testability and Good Design, Michael Feathers

- Design Patterns Explained: A New Perspective on Object-Oriented Design, Alan Shalloway, James R. Trott

- Design Patterns: Elements of Reusable Object-Oriented Software, Erich Gamma, Ralph Johnson, John Vlissides, Richard Helm

- Clean Code Talks - Global State and Singletons, Miško Hevery

- Why Singletons Are Controversial

- Flag Argument, Martin Fowler

- An example of listening to the tests to improve a design, Manuel Rivero

- Code pollution, Vladimir Khorikov

- Guice

- Guice Scopes

Saturday, January 23, 2021

Solving the Beverages Prices Refactoring kata (2): limiting the options on the menu

Introduction.

This is the second and last post in a series of posts showing a possible solution to the Beverages Prices Refactoring kata that I recently developed with some people from Women Tech Makers Barcelona with whom I’m working through Codesai’s Practice Program twice a month.

In the previous post we introduced a design based on composition that fixed the Combinatorial Explosion code smell and produced a flexible solution applying the decorator design pattern. There was a potential problem in that solution because the client code, the code that needed to find out the price of the beverages, knew[1] too much about how to create and compose beverages and supplements.

Have a look, for instance, at the following line: new WithCream(new WithMilk(new Coffee())). It knows about three classes and how they are being composed. In the case of this kata, that might not be a big problem, since the client code is only comprised of a few tests, but, in a larger code base, this problem might spread across numerous classes generating a code smell known as Creation Sprawl[2].

In this post, we’ll try to reduce client knowledge of concrete component and decorator classes and their composition by encapsulating all the creational knowledge behind a nice, readable interface that will keep all the complexity of combining the supplements (decorators) and beverages (components) hidden from the client code.

Another more subtle problem with this design based on composition has to do with something that we have lost: the fact that not all combinations of beverages and supplements were allowed on the menu. That knowledge was implicitly encoded in the initial inheritance hierarchy, and disappeared with it. In the current design we can dynamically create any combination of beverages and supplements, including those that were not included in the original menu, like, for instance, a tea with cinnamon, milk and cream (doing new WithCinnamon(new WithCream(new WithMilk(new Tea())))) which you might find delicious :). We’ll also explore possible ways to recover that limitation of options.

We’ll start by examining some creational patterns that are usually applied along with the decorator design pattern.

Would the Factory pattern help?

In order to encapsulate the creational code and hide its details from client code, we might use the factory pattern described by Joshua Kerievsky in his Refactoring to Patterns. A factory is a class that implements one or more Creation Methods. A Creation Method is a static or non-static method that creates and returns an object instance[3].

We might apply the Encapsulate Classes with Factory refactoring[4] to introduce a factory class with an interface which provided a creation method for each entry on the menu, that is, it would have a method for making coffee, another one for making tea, another one for making coffee with milk, and so on, and so forth.

Before starting to refactor, let’s think a bit about the consequences of introducing this pattern to assess if it will leave us in a better design spot or not. At first sight, introducing the factory pattern seems to simplify client code and reduce the overall coupling because it encapsulates all the creational logic hiding the complexity related to composing decorators and components behind its interface which solves the first problem we discussed in the introduction. The second one, limiting the combinations of beverages and supplements to only the ones available on the menu, is solved just by limiting the methods in the interface of the factory.

However, it would also create a maintenance problem somehow similar to the initial combinatorial explosion code smell we were trying to avoid when we decided to introduce the decorator design pattern. As we said, the interface of the factory would have a method for each combination of beverages and supplements available on the menu. This means that to add a new supplement we’d have to multiply the number of Creation methods in the interface of the factory by two. So, we might say that, introducing the factory pattern, we’d get an interface suffering from a combinatorial explosion of methods[5].
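To make that growth concrete, here is a minimal sketch of such a factory for a subset of the menu. The BeverageFactory name, the creation method names and the prices are our assumptions for illustration only:

```java
interface Beverage {
    double price();
}

// Concrete components (prices are illustrative assumptions).
class Coffee implements Beverage {
    public double price() { return 1.20; }
}

class Tea implements Beverage {
    public double price() { return 1.50; }
}

// Decorators for the supplements.
class WithMilk implements Beverage {
    private final Beverage beverage;
    WithMilk(Beverage beverage) { this.beverage = beverage; }
    public double price() { return beverage.price() + 0.10; }
}

class WithCream implements Beverage {
    private final Beverage beverage;
    WithCream(Beverage beverage) { this.beverage = beverage; }
    public double price() { return beverage.price() + 0.15; }
}

// Hypothetical factory: one creation method per entry on the menu.
class BeverageFactory {
    static Beverage coffee() { return new Coffee(); }
    static Beverage tea() { return new Tea(); }
    static Beverage coffeeWithMilk() { return new WithMilk(new Coffee()); }
    static Beverage coffeeWithMilkAndCream() { return new WithCream(new WithMilk(new Coffee())); }
    static Beverage teaWithMilk() { return new WithMilk(new Tea()); }
    // Offering a new optional supplement for every entry above would need
    // one extra method per existing method: coffeeWithCinnamon(),
    // coffeeWithMilkAndCinnamon(), teaWithCinnamon(), and so on.
}
```

Client code would only call methods such as BeverageFactory.coffeeWithMilk(), which hides the composition and limits the options, at the cost of a method count that doubles with each new supplement.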

Knowing that, we might conclude that a solution using the factory pattern would be interesting only when having a small number of options or if we didn’t expect the number of supplements to grow. As we said in the previous post, we think it likely that we’ll be required to add new supplements so we prefer a design that is easy to evolve along the axis of change of adding new supplements[6]. This means the factory pattern is not the way to go for us this time because it’s not flexible enough for our current needs. We’ll have to explore more flexible alternatives[7].

Let’s try using the Builder design pattern.

The builder design pattern is often used for constructing complex and/or composite objects[8]. Using it we might create a nice readable interface to compose the beverages and supplements bit by bit. Like the factory pattern, a builder would encapsulate the complexity of combining decorators from the client code. Unlike the factory pattern, a builder allows us to construct the composite following a varying process. It’s this last characteristic that will avoid the combinatorial explosion of methods that made us discard the factory pattern.

In this case you can introduce the builder by applying the Encapsulate Composite with Builder refactoring. Let’s have a look at how we implemented it:

Notice how we keep the state of the partially composed object and apply the decorations incrementally until it’s returned by the make method. Notice also how the beverage is the initial state in the process of creating the composite object.
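The original listing is not reproduced here. A minimal sketch of a builder with that shape might look like the following, where the method names and the prices are our assumptions:

```java
interface Beverage {
    double price();
}

class Coffee implements Beverage {
    public double price() { return 1.20; } // illustrative price
}

class Tea implements Beverage {
    public double price() { return 1.50; } // illustrative price
}

class WithMilk implements Beverage {
    private final Beverage beverage;
    WithMilk(Beverage beverage) { this.beverage = beverage; }
    public double price() { return beverage.price() + 0.10; }
}

class WithCream implements Beverage {
    private final Beverage beverage;
    WithCream(Beverage beverage) { this.beverage = beverage; }
    public double price() { return beverage.price() + 0.15; }
}

// Builder sketch: keeps the partially composed beverage as state.
class BeverageMachine {
    private Beverage beverage;

    // The base beverage is the initial state of the composite.
    BeverageMachine coffee() { beverage = new Coffee(); return this; }
    BeverageMachine tea() { beverage = new Tea(); return this; }

    // Each supplement wraps whatever has been composed so far.
    BeverageMachine withMilk() { beverage = new WithMilk(beverage); return this; }
    BeverageMachine withCream() { beverage = new WithCream(beverage); return this; }

    // Returns the fully composed beverage.
    Beverage make() { return beverage; }
}
```

With this sketch, client code would read new BeverageMachine().coffee().withMilk().withCream().make() and would no longer mention any concrete decorator class.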

These are the tests after introducing the builder design pattern:

Notice the fluent interface that we chose for the builder. Although a fluent interface is not a requirement to write a builder, we think it reads nicely.

As we said before, using a builder does not suffer from the combinatorial explosion of methods that the factory pattern did. The builder design pattern is more flexible than the factory pattern which makes it more suitable for composing components and decorators.

Still, our success is only partial because the builder can create any combination of beverages and supplements. A drawback of using a builder instead of a factory is usually that clients need to have more domain knowledge. In this case, the current solution forces the client code to hold a bit of domain knowledge: it knows which combinations of beverages and supplements are available on the menu.

We’ll fix this last problem in the next section.

A hybrid solution combining factory and builder patterns.

Let’s try to limit the possible combinations of beverages and supplements to the options on the menu by combining the creation methods of the factory pattern and the builder design pattern.

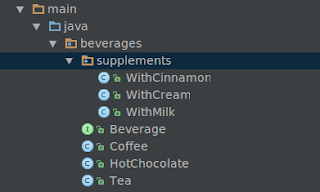

To do so, we added to BeverageMachine the creation methods, coffee, tea and hotChocolate, that create different builders for each type of beverage: CoffeeBuilder, TeaBuilder and HotChocolateBuilder, respectively. Each of the builders has only the public methods to select the supplements which are possible on the menu for a given type of beverage.

Notice that we chose to write the builders as inner classes of the BeverageMachine class. They could have been independent classes, but we prefer inner classes because the builders are only used by BeverageMachine and this way they don’t appear anywhere else.

This is the first design that solves the problem of limiting the possible combinations of beverages and supplements to only the options on the menu. It still encapsulates the creational logic and still reads well. In fact the tests haven’t changed at all because BeverageMachine’s public interface is exactly the same.

However, the new builders present duplication: the code related to supplements that can be used with different beverages and the code in the make method.
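That duplication is easy to see in a minimal sketch of the hybrid. Only two of the three builders are shown, and which supplements each beverage accepts, as well as the prices, are assumptions made for illustration:

```java
interface Beverage {
    double price();
}

class Coffee implements Beverage {
    public double price() { return 1.20; } // illustrative price
}

class Tea implements Beverage {
    public double price() { return 1.50; } // illustrative price
}

class WithMilk implements Beverage {
    private final Beverage beverage;
    WithMilk(Beverage beverage) { this.beverage = beverage; }
    public double price() { return beverage.price() + 0.10; }
}

class WithCream implements Beverage {
    private final Beverage beverage;
    WithCream(Beverage beverage) { this.beverage = beverage; }
    public double price() { return beverage.price() + 0.15; }
}

class BeverageMachine {
    // Creation methods: each one returns the builder for one beverage.
    CoffeeBuilder coffee() { return new CoffeeBuilder(); }
    TeaBuilder tea() { return new TeaBuilder(); }

    // Assumed menu: coffee accepts milk and cream.
    class CoffeeBuilder {
        private Beverage beverage = new Coffee();
        CoffeeBuilder withMilk() { beverage = new WithMilk(beverage); return this; }
        CoffeeBuilder withCream() { beverage = new WithCream(beverage); return this; }
        Beverage make() { return beverage; }
    }

    // Assumed menu: tea accepts only milk, so there is no withCream here.
    class TeaBuilder {
        private Beverage beverage = new Tea();
        // Same body as CoffeeBuilder.withMilk: duplicated code.
        TeaBuilder withMilk() { beverage = new WithMilk(beverage); return this; }
        // Same body as CoffeeBuilder.make: duplicated code.
        Beverage make() { return beverage; }
    }
}
```

In this sketch new BeverageMachine().tea().withCream() does not compile, which is how the menu restriction is enforced.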

What is different for the clients that call the coffee method and the clients that call the tea or hotChocolate methods are the public methods they can use on each builder, that is, their interfaces. When we had only one builder, we had an interface with methods that were not interesting for some of its clients.

By having three builders we segregated the interfaces so that no client was forced to depend on methods it does not use[9]. However we didn’t need to introduce classes to segregate the interfaces, we could have just used, well, interfaces. As we’ll see in the next section using interfaces would have avoided the duplication in the implementation of the builders.

Segregating interfaces better by using interfaces.

As we said, instead of directly using three different builder classes, it’s better to use three interfaces, one for each kind of builder. That also complies with the Interface Segregation Principle, and using interfaces helps us avoid duplicated code in the implementation of the builders, because we can write only one builder class, BeverageMachine, that implements the three interfaces.

Notice how, in the creation methods, we feed the base beverage into BeverageMachine through its constructor, and how each of those creation methods returns the appropriate interface. Notice also that BeverageMachine’s public interface remains the same, so this refactoring won’t change the tests at all. You can check the resulting builder interfaces in Gist: TeaBuilder, HotChocolateBuilder and CoffeeBuilder.
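As a minimal sketch of this idea, showing only two of the three interfaces, a single class can implement every builder interface while each creation method exposes just one of them. The supplements allowed per beverage, the private constructor and the prices are our assumptions:

```java
interface Beverage {
    double price();
}

class Coffee implements Beverage {
    public double price() { return 1.20; } // illustrative price
}

class Tea implements Beverage {
    public double price() { return 1.50; } // illustrative price
}

class WithMilk implements Beverage {
    private final Beverage beverage;
    WithMilk(Beverage beverage) { this.beverage = beverage; }
    public double price() { return beverage.price() + 0.10; }
}

class WithCream implements Beverage {
    private final Beverage beverage;
    WithCream(Beverage beverage) { this.beverage = beverage; }
    public double price() { return beverage.price() + 0.15; }
}

// Segregated interfaces: each client sees only the methods it may use.
interface CoffeeBuilder {
    CoffeeBuilder withMilk();
    CoffeeBuilder withCream();
    Beverage make();
}

interface TeaBuilder {
    TeaBuilder withMilk();
    Beverage make();
}

// One implementation for all the builder interfaces: no duplication.
class BeverageMachine implements CoffeeBuilder, TeaBuilder {
    private Beverage beverage;

    BeverageMachine() {}

    // The base beverage is fed in through the constructor.
    private BeverageMachine(Beverage base) { this.beverage = base; }

    // Each creation method returns the interface for its beverage.
    CoffeeBuilder coffee() { return new BeverageMachine(new Coffee()); }
    TeaBuilder tea() { return new BeverageMachine(new Tea()); }

    // Returning BeverageMachine satisfies both interfaces' withMilk.
    @Override
    public BeverageMachine withMilk() { beverage = new WithMilk(beverage); return this; }

    @Override
    public BeverageMachine withCream() { beverage = new WithCream(beverage); return this; }

    @Override
    public Beverage make() { return beverage; }
}
```

Since tea() is typed as TeaBuilder, a call like new BeverageMachine().tea().withCream() is rejected by the compiler even though the implementing class has a withCream method.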

Conclusions.

In this last post of the series dedicated to the Beverages Prices Refactoring kata, we’ve explored different ways to avoid creation sprawl, reduce coupling with client code and reduce implicit creational domain knowledge in client code. In doing so, we have learned about and applied several creational patterns (factory pattern, and builder design pattern), and some related refactorings. We have also used some design principles (such as coupling, open-closed principle or interface segregation principle), and code smells (such as combinatorial explosion or creation sprawl) to judge different solutions and guide our refactorings.

Acknowledgements.

I’d like to thank the WTM study group, and especially Inma Navas for solving this kata with me. Thanks also to my Codesai colleagues and to Inma Navas for reading the initial drafts and giving me feedback and to Amelia Hallsworth for the picture.

Notes.

[1] Knowledge here means coupling or connascence.

[2] Creation Sprawl is a code smell that happens when the knowledge for creating an object is spread out across numerous classes, so that creational responsibilities are placed in classes that should not be playing any role in object creation. This code smell was described by Joshua Kerievsky in his Refactoring to Patterns book.

[3] Don’t confuse the Factory Pattern with design patterns with similar names like Factory method pattern or Abstract factory pattern. These two design patterns are creational patterns described in the Design Patterns: Elements of Reusable Object-Oriented Software book.

A Factory Method is “a non-static method that returns a base class or an interface type and that is implemented in a hierarchy to enable polymorphic creation” whereas an Abstract Factory is “an interface for creating families of related or dependent objects without specifying their concrete classes”.

In the Factory Pattern a Factory is “any class that implements one or more Creation Methods” which are “static or non-static methods that create and return an object instance”. This definition is more general. Every Abstract Factory is a Factory (but not the other way around), and every Factory Method is a Creation Method (but not necessarily the reverse). Creation Method also includes what Martin Fowler called “factory method” in Refactoring (which is not the Factory Method design pattern) and Joshua Bloch called “static factory” (probably a less confusing name than Fowler’s one) in Effective Java.

[4] Presented in the fourth chapter of Refactoring to Patterns that is dedicated to Creational Patterns.

[5] If you remember the previous post, before introducing the decorator design pattern, we suffered from a combinatorial explosion of classes (adding a new supplement meant multiplying the number of classes by two). Now, the factory interface (its public methods) would suffer a combinatorial explosion of methods.

[6] In other words: that it’s protected against that type of variation (https://www.martinfowler.com/ieeeSoftware/protectedVariation.pdf).

[7] This is common when working with creational patterns. All of them encapsulate knowledge about which concrete classes are used and hide how instances of these classes are created and put together, but some are more flexible than others. It’s usual to start using a Factory pattern and evolve toward the other creational patterns as we realize more flexibility is needed.

[8] We have devoted several posts to builders: Remove data structures noise from your tests with builders, Refactoring tests using builder functions in Clojure/ClojureScript, In a small piece of code, The curious case of the negative builder.

[9] Following the Interface Segregation Principle that states that “no client should be forced to depend on methods it does not use”.

References.

Books

- Design Patterns: Elements of Reusable Object-Oriented Software, Erich Gamma, Ralph Johnson, John Vlissides, Richard Helm

- Head First Design Patterns, Eric Freeman, Kathy Sierra, Bert Bates, Elisabeth Robson

Articles

- The Beverages Prices Refactoring kata: a kata to practice refactoring away from an awful application of inheritance, Manuel Rivero

- Solving the Beverages Prices Refactoring kata (1): composition over inheritance, Manuel Rivero

- Protected Variation: The Importance of Being Closed, Craig Larman

Monday, November 30, 2020

Solving the Beverages Prices Refactoring kata (1): composition over inheritance

Introduction.

We are going to show a possible solution to the Beverages Prices Refactoring kata that we developed recently with some people from Women Tech Makers Barcelona with whom I’m doing Codesai’s Practice Program twice a month.

The Beverages Prices Refactoring kata shows an example of inheritance gone astray. The initial code computes the price of the different beverages that are sold in a coffee house. There are some supplements that can be added to those beverages. Each supplement increases the price a bit. Not all combinations of drinks and supplements are possible.

As part of the kata, we are asked to add an optional cinnamon supplement that costs 0.05€ to all our existing catalog of beverages. We are also advised to refactor the initial code a bit before introducing the new feature. Let’s see why.

The initial code.

To get an idea of the kind of problem we are facing, we’ll have a look at the code. There are 8 files: a Beverage interface and 7 classes, one for each type of beverage and one for each allowed combination of beverages and supplements.

A closer look reveals that the initial design uses inheritance and polymorphism to enable the differences in computing the price of each allowed combination of beverage and supplements. This is the inheritance hierarchy:

If that diagram is not enough to scare you, have a quick look at the unit tests of the code:

First, we make the change easy[1].

Given the current design, if we decided to add the new feature straight away, we would end up with 14 classes (2 times the initial number of classes). The same would happen for each new supplement we were required to add: we would be forced to double the number of classes. This means that adding n more supplements would multiply the initial number of classes by 2^n (the 7 initial classes would become 14 after one new supplement, 28 after two, and so on).

This exponential growth in the number of classes is a typical symptom of a code smell called Combinatorial Explosion[2]. In this particular case the problem is caused by using inheritance to represent the pricing of beverages plus supplements.

In order to introduce the new cinnamon supplement, we thought it sensible to do a bit of preparatory refactoring first in order to remove the Combinatorial Explosion code smell. The recommended refactoring for this code smell is Replace Inheritance with Delegation, which leads to code that uses composition instead of inheritance to avoid the combinatorial explosion. If all the variants[3] (supplements) keep the same interface, we’d be creating an example of the decorator design pattern[4].

The decorator pattern provides an alternative to subclassing for extending behavior. It involves a set of decorator classes that wrap concrete components and keep the same interface as the concrete components. A decorator changes the behavior of a wrapped component by adding new functionality before and/or after delegating to the concrete component.

Applying the decorator design pattern to compute the pricing of beverages plus supplements, the beverages would correspond to the concrete components, tea, coffee and hot chocolate; whereas the supplements, milk and cream, would correspond to the decorators.

We can obtain the behavior for a given combination of supplements and beverage by composing supplements (decorators) with a beverage (base component).

For instance, if we had the following WithMilk decorator for the milk supplement pricing,

we would compose it with a Tea instance to create the behavior that computes the price of a tea with milk:

A nice thing about decorators is that, since they have the same interface as the component they wrap, they are transparent for the client code[6] which never has to know that it’s dealing with a decorator. This allows us to pass them around in place of the wrapped object which makes it possible to compose behaviors using as many decorators as we like. The following example shows how to compose the behavior for computing the price of a coffee with milk and cream[7].
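The idea can be sketched in Python as follows. This is a minimal illustration, not the kata's actual code: the class names follow the ones used in the text, while the `price` method name and all the prices are assumptions made for the example.

```python
from abc import ABC, abstractmethod
from decimal import Decimal


class Beverage(ABC):
    """Interface shared by concrete components and decorators."""

    @abstractmethod
    def price(self) -> Decimal: ...


class Tea(Beverage):
    def price(self) -> Decimal:
        return Decimal("1.50")  # illustrative price


class Coffee(Beverage):
    def price(self) -> Decimal:
        return Decimal("1.20")  # illustrative price


class WithMilk(Beverage):
    """Decorator: adds the milk price after delegating to the wrapped beverage."""

    def __init__(self, beverage: Beverage) -> None:
        self._beverage = beverage

    def price(self) -> Decimal:
        return self._beverage.price() + Decimal("0.10")  # illustrative price


class WithCream(Beverage):
    """Decorator: adds the cream price after delegating to the wrapped beverage."""

    def __init__(self, beverage: Beverage) -> None:
        self._beverage = beverage

    def price(self) -> Decimal:
        return self._beverage.price() + Decimal("0.15")  # illustrative price


# Client code only sees Beverage, so decorators can be stacked freely.
tea_with_milk = WithMilk(Tea())
coffee_with_milk_and_cream = WithCream(WithMilk(Coffee()))
print(tea_with_milk.price(), coffee_with_milk_and_cream.price())
```

Because each decorator is itself a `Beverage`, wrapping an already decorated beverage needs no special handling.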

After applying the Replace Inheritance with Delegation refactoring we get to a design that uses composition instead of inheritance to create all the combinations of supplements and beverages. This fixes the Combinatorial Explosion code smell.

You can have a look at the rest of the tests after this refactoring in this gist.

Then, we make the easy change.

Once we had the new design based on composition instead of inheritance in place, adding the requested feature was as easy as creating a new decorator that represents the cinnamon supplement pricing:
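A hedged Python sketch of what such a decorator might look like. The 0.05€ price comes from the kata statement; the `price` method name and the coffee price are assumptions for illustration only:

```python
from decimal import Decimal


class Coffee:
    def price(self) -> Decimal:
        return Decimal("1.20")  # illustrative price


class WithCinnamon:
    """The only new class required to support the cinnamon supplement."""

    def __init__(self, beverage) -> None:
        self._beverage = beverage

    def price(self) -> Decimal:
        # Delegate to the wrapped beverage, then add the supplement price.
        return self._beverage.price() + Decimal("0.05")


coffee_with_cinnamon = WithCinnamon(Coffee())
print(coffee_with_cinnamon.price())
```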

Remember that, with the initial design, adding this feature would have involved doubling the number of classes.

What have we gained and lost with this refactoring?

After the refactoring we have a new design that uses the decorator design pattern. This is a flexible alternative design to subclassing for extending behavior that allows us to add behavior dynamically to the objects wrapped by the decorators.

Thanks to this runtime flexibility we managed to fix the Combinatorial Explosion code smell and that made it easier to add the new feature. Now, instead of multiplying the number of classes by two, adding a new supplement only involves adding one new decorator class that represents the new supplement pricing. This new design makes the client code open-closed to the axis of change of adding new supplements.

On the flip side, we have introduced some complexity[8] related to creating the different compositions of decorators and components. At the moment this complexity is being managed by the client code (notice the chains of new calls in the test snippets above).

There’s also something else that we have lost in the process. In the initial design only some combinations of beverages and supplements were allowed. This fact was encoded in the initial inheritance hierarchy. Now with our decorators we can dynamically add any possible combination of beverages and supplements.

All in all, we think that the refactoring leaves us in a better spot because we’ll likely be required to add new supplements, and there are well-known improvements we can make to the design to isolate the client code from the kind of complexity we have introduced.

Conclusion.

We have shown an example of preparatory refactoring to make the addition of a new feature easier. We have also learned about the Combinatorial Explosion code smell and how to fix it using the decorator design pattern, arriving at a new design in which we have protected the client code[9] against variations involving new supplements.

In a future post we will show how to encapsulate the creation of the different compositions of decorators and components using builders and/or factories to hide that complexity from client code, and show how we can limit again the allowed combinations that are part of the menu.

Acknowledgements.

I’d like to thank the WTM study group, and especially Inma Navas for solving this kata with me.

Thanks to my Codesai colleagues and Inma Navas for reading the initial drafts and giving me feedback and to Lisa Fotios for her picture.

Notes.

[1] This and the next header come from Kent Beck’s quote:

“For each desired change, make the change easy (warning: this may be hard), then make the easy change”

[2] You can find this code smell described in Bill Wake’s wonderful Refactoring Workbook.

[3] In this context variant means a variation in behavior. For instance, each derived class in a hierarchy is a variant of the base class.

[4] Have a look at the chapter devoted to the decorator design pattern in the great Head First Design Patterns. It’s the most didactic and fun explanation of the pattern I’ve ever found. This kata is heavily inspired by the example used in that chapter to explain the pattern.

[5] We could have also solved this problem composing functions instead of objects, but we wanted to practice with objects in this solution of the kata. That might be an interesting exercise for another practice session.

[6] In this context client code means code that uses objects implementing the interface that both the components and decorators implement, which in the kata would correspond to the Beverage interface.

[7] Also known as a “cortado leche y leche” in Gran Canaria :)

[8] Complexity is most often the price we pay for flexibility. That’s why we should always assess if the gains are worth the price.

[9] Protected variations is another way to refer to the open-closed principle. I particularly prefer that way to refer to this design principle because I think it is expressed in a way that relates less to object orientation. Have a look at Craig Larman’s great article about it: Protected Variation: The Importance of Being Closed

References.

- The Beverages Prices Refactoring kata: a kata to practice refactoring away from an awful application of inheritance

- Replace Inheritance with Delegation

- Protected Variation: The Importance of Being Closed, Craig Larman

- Open-closed Principle

- Refactoring Workbook, William C. Wake

- Head First Design Patterns, Eric Freeman, Kathy Sierra, Bert Bates, Elisabeth Robson

Sunday, October 18, 2020

Sleeping is not the best option

Introduction.

Some time ago we were developing a code that stored some data with a given TTL. We wanted to check not only that the data was stored correctly but also that it expired after the given TTL. This is an example of testing asynchronous code.

When testing asynchronous code we need to carefully coordinate the test with the system it is testing to avoid running the assertion before the tested action has completed[1]. For example, the following test will always fail because the assertion in line 30 is checked before the data has expired:

In this case the test always fails but in other cases it might be worse, failing intermittently when the system is working, or passing when the system is broken. We need to make the test wait to give the action we are testing time to complete successfully and fail if this doesn’t happen within a given timeout period.

Sleeping is not the best option.

This is an improved version of the previous test in which we make the test code wait before checking that the data has expired, to give the code under test time to run:

The problem with the simple sleeping approach is that in some runs the timeout might be enough for the data to expire but in other runs it might not, so the test will fail intermittently; it becomes a flickering test. Flickering tests are confusing because when they fail, we don’t know whether it’s due to a real bug, or it is just a false positive. If the failure is relatively common, the team might start ignoring those tests which can mask real defects and completely destroy the value of having automated tests.

Since the intermittent failures happen because the timeout is too close to the time the behavior we are testing takes to run, many teams decide to reduce the frequency of those failures by increasing the time each test sleeps before checking that the action under test was successful. This is not practical because it soon leads to test suites that take too long to run.

Alternative approaches.

If we are able to detect success sooner, succeeding tests will provide rapid feedback, and we only have to wait for failing tests to time out. This is a much better approach than waiting the same amount of time for each test regardless of whether it fails or succeeds.

There are two main strategies to detect success sooner: capturing notifications[2] and polling for changes.

In the case we are using as an example, polling was the only option because Redis didn’t send any monitoring events we could listen to.

Polling for changes.

To detect success as soon as possible, we’re going to probe several times, separated by a time interval which will be shorter than the previous timeout. If the result of a probe is what we expect, the test passes; if the result we expect is not there yet, we sleep a bit and retry. If, after several retries, the expected value is still not there, the test fails.

Have a look at the checkThatDataHasExpired method in the following code:

By polling for changes we avoid always waiting the maximum amount of time. Only in the worst case scenario, when consuming all the retries without detecting success, we’ll wait as much as in the just sleeping approach that used a fixed timeout.
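The original example was written in PHP against Redis. As a rough Python sketch of the same polling idea, the snippet below uses a hypothetical in-memory `FakeTtlStore` in place of the real store; the names, the TTL, the number of retries and the sleep time are all assumptions chosen for illustration.

```python
import time


class FakeTtlStore:
    """Hypothetical in-memory stand-in for a store whose entries expire after a TTL."""

    def __init__(self) -> None:
        self._entries = {}

    def put(self, key, value, ttl_seconds):
        self._entries[key] = (value, time.monotonic() + ttl_seconds)

    def get(self, key):
        value, expires_at = self._entries.get(key, (None, 0.0))
        return value if time.monotonic() < expires_at else None


def check_that_data_has_expired(store, key, retries=100, sleep_seconds=0.01):
    """Probe repeatedly; return as soon as the data is gone, fail after the last retry."""
    for _ in range(retries):
        if store.get(key) is None:  # probe and check
            return
        time.sleep(sleep_seconds)  # not there yet: wait a bit and retry
    raise AssertionError(f"{key!r} had not expired after {retries} probes")


store = FakeTtlStore()
store.put("token", "abc", ttl_seconds=0.2)
assert store.get("token") == "abc"  # stored correctly
check_that_data_has_expired(store, "token")  # returns shortly after the TTL elapses
```

The test waits roughly as long as the TTL, rather than the full worst-case budget of retries times sleep time.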

Extracting a helper.

Scattering ad hoc low-level code that polls and probes, like the one in checkThatDataHasExpired, throughout your tests not only makes them difficult to understand, but is also a very bad case of duplication. So we extracted it to a helper that we could reuse in different situations.

What varies from one application of this approach to another are the probe, the check, the number of probes before failing and the time between probes, everything else we extracted to the following helper[3]:

This is how the previous tests would look after using the helper:

Notice that we’re passing the probe, the check, the number of probes and the sleep time between probes to the AsyncTestHelpers::assertWithPolling function.
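For readers who don't use PHP, a helper with the same four parameters might be sketched in Python like this. The function name mirrors the PHP one, but the signature and the failure message are assumptions rather than the original implementation:

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def assert_with_polling(
    probe: Callable[[], T],
    check: Callable[[T], bool],
    retries: int,
    sleep_seconds: float,
) -> None:
    """Run probe up to `retries` times, succeeding as soon as check accepts its result."""
    for _ in range(retries):
        if check(probe()):
            return
        time.sleep(sleep_seconds)
    raise AssertionError(f"condition not met after {retries} probes")


# Usage with a deterministic probe: the data "expires" on the third probe.
observed = iter(["data", "data", None])
assert_with_polling(
    probe=lambda: next(observed),
    check=lambda value: value is None,
    retries=5,
    sleep_seconds=0.001,
)
```

Keeping the probe and the check as separate callables lets the same helper serve tests that wait for very different conditions.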

Conclusions.

We showed an example in PHP of an approach to test asynchronous code described by Steve Freeman and Nat Pryce in their Growing Object-Oriented Software, Guided by Tests book. This approach avoids flickering tests and produces much faster test suites than using a fixed timeout. We also showed how we abstracted this approach by extracting a helper function that we are reusing in our code.

We hope you’ve found this approach interesting. If you want to learn more about this and several other techniques to effectively test asynchronous code, have a look at the wonderful Growing Object-Oriented Software, Guided by Tests book[4].

Acknowledgements.

Thanks to my Codesai colleagues for reading the initial drafts and giving me feedback and to Chrisy Totty for the lovely cat picture.

Notes.

Some time ago we developed some helpers using the capturing notifications strategy to test asynchronous ClojureScript code that was using core.async channels. Have a look at, for instance, the expect-async-message assertion helper in which we use core.async/alts! and core.async/timeout to implement this behaviour. The core.async/alts! function selects the first channel that responds. If that channel is the one the test code was observing we assert that the received message is what we expected. If the channel that responds first is the one generated by core.async/timeout we fail the test. We mentioned these async-test-tools in a previous post: Testing Om components with cljs-react-test.

References.

- Growing Object-Oriented Software, Guided by Tests, Steve Freeman and Nat Pryce (chapter 27: Testing Asynchronous Code)

Saturday, April 25, 2020

Friday, April 24, 2020

Interesting Talk: "How to rewrite, a bit at a time"

Thursday, June 27, 2019

An example of listening to the tests to improve a design

Introduction.

Recently in the B2B team at LIFULL Connect, we improved the validation of the clicks our API receives using a service that detects whether the clicks were made by a bot or a human being.

So we used TDD to add this new validation to the previously existing validation that checked if the click contained all mandatory information. This was the resulting code:

and these were its tests:

The problem with these tests is that they know too much. They are coupled to many implementation details. They not only know the concrete validations we apply to a click and the order in which they are applied, but also details about what gets logged when a concrete validation fails. There are multiple axes of change that will make these tests break. The tests are fragile against those axes of change and, as such, they might become a future maintenance burden if changes along those axes are required.

So what might we do about that fragility when any of those changes come?

Improving the design to have less fragile tests.

As we said before, the test fragility was hinting at a design problem in the ClickValidation code. The problem is that it’s concentrating too much knowledge because it’s written in a procedural style in which it is querying every concrete validation to know if the click is ok, combining the result of all those validations and knowing when to log validation failures. Those are too many responsibilities for ClickValidation and are the cause of the fragility in the tests.

We can reverse this situation by changing to a more object-oriented implementation in which responsibilities are better distributed. Let’s see how that design might look:

1. Removing knowledge about logging.

After this change, ClickValidation will know nothing about logging. We can use the same technique to avoid knowing about any similar side-effects which concrete validations might produce.

First we create an interface, ClickValidator, that any object that validates clicks should implement:

Next we create a new class NoBotClickValidator that wraps the BotClickDetector and adapts[1] it to implement the ClickValidator interface. This wrapper also enriches BotClickDetector’s behavior by taking charge of logging in case the click is not valid.

These are the tests of NoBotClickValidator that take care of the delegation to BotClickDetector and the logging:
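The shape of this wrapper can be sketched in Python. The class names come from the text, but everything else is an assumption: the `is_valid` and `is_bot` method names, the click representation as a dict, the log message, and the `FakeBotClickDetector` stand-in (the real detector's code is not shown in this post).

```python
import logging
from typing import Protocol


class ClickValidator(Protocol):
    """Hypothetical common interface for anything that validates clicks."""

    def is_valid(self, click: dict) -> bool: ...


class FakeBotClickDetector:
    """Stand-in for the real detector, only for illustration."""

    def is_bot(self, click: dict) -> bool:
        return click.get("user_agent") == "bot"


class NoBotClickValidator:
    """Adapts a bot detector to ClickValidator and owns the logging side effect."""

    def __init__(self, detector, logger: logging.Logger) -> None:
        self._detector = detector
        self._logger = logger

    def is_valid(self, click: dict) -> bool:
        if self._detector.is_bot(click):
            self._logger.warning("Click rejected, made by a bot: %s", click)
            return False
        return True


validator: ClickValidator = NoBotClickValidator(
    FakeBotClickDetector(), logging.getLogger("clicks")
)
print(validator.is_valid({"user_agent": "Mozilla"}))
```

With the logging inside the wrapper, whoever combines validators only has to deal with booleans.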

If we used NoBotClickValidator in ClickValidation, we’d remove all knowledge about logging from ClickValidation.

Of course, that knowledge would also disappear from its tests. By using the ClickValidator interface for all concrete validations and wrapping validations with side-effects like logging, we’d make ClickValidation tests robust to changes involving some of the possible axes of change that were making them fragile:

- Changing the interface of any of the individual validations.

- Adding side-effects to any of the validations.

2. Another improvement: don't use test doubles when it's not worth it[2].

There’s another way to make ClickValidation tests less fragile.

If we have a look at ClickParamsValidator and BotClickDetector (I can’t show their code here for security reasons), they have very different natures. ClickParamsValidator has no collaborators, no state and a very simple logic, whereas BotClickDetector has several collaborators, state and a complicated validation logic.

Stubbing ClickParamsValidator in ClickValidation tests is not giving us any benefit over directly using it, and it’s producing coupling between the tests and the code.

On the contrary, stubbing NoBotClickValidator (which wraps BotClickDetector) is really worth it, because, even though it also produces coupling, it makes ClickValidation tests much simpler.

Using a test double when you’d be better off using the real collaborator is a weakness in the design of the test, rather than in the code to be tested.

These would be the tests for the ClickValidation code with no logging knowledge, after applying this idea of not using test doubles for everything:

Notice how the tests now use the real ClickParamsValidator and how that reduces the coupling with the production code and makes the set up simpler.

3. Removing knowledge about the concrete sequence of validations.

After this change, the new design will compose validations in a way that will result in ClickValidation being only in charge of combining the result of a given sequence of validations.

First we refactor the click validation so that the validation is now done by composing several validations:

The new validation code has several advantages over the previous one:

- It does not depend on concrete validations any more

- It does not depend on the order in which the validations are made.

It has only one responsibility: it applies several validations in sequence, if all of them are valid, it will accept the click, but if any given validation fails, it will reject the click and stop applying the rest of the validations. If you think about it, it’s behaving like an and operator.
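A minimal Python sketch of this "and" behaviour, assuming a hypothetical `is_valid` method on every validator. The `StubValidator` class exists only to make the short-circuiting visible:

```python
class ClickValidation:
    """Accepts a click only if every validator in the sequence accepts it."""

    def __init__(self, validators) -> None:
        self._validators = list(validators)

    def is_valid(self, click) -> bool:
        # all() short-circuits: it stops at the first validator that rejects the click.
        return all(validator.is_valid(click) for validator in self._validators)


class StubValidator:
    """Illustration-only validator that records when it is called."""

    def __init__(self, name, result, calls) -> None:
        self._name, self._result, self._calls = name, result, calls

    def is_valid(self, click) -> bool:
        self._calls.append(self._name)
        return self._result


calls = []
validation = ClickValidation([
    StubValidator("first", True, calls),
    StubValidator("second", False, calls),
    StubValidator("third", True, calls),
])
accepted = validation.is_valid({"id": "42"})
print(accepted, calls)
```

The third validator is never consulted because the second one already rejected the click.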

We may write these tests for this new version of the click validation:

These tests are robust to the changes, described in the introduction, that made the initial version of the tests fragile:

- Changing the interface of any of the individual validations.

- Adding side-effects to any of the validations.

- Adding more validations.

- Changing the order of the validation.

However, this version of ClickValidationTest is so general and flexible, that using it, our tests would stop knowing which validations, and in which order, are applied to the clicks[3]. That sequence of validations is a business rule and, as such, we should protect it. We might keep this version of ClickValidationTest only if we had some outer test protecting the desired sequence of validations.

This other version of the tests, on the other hand, keeps protecting the business rule:

Notice how this version of the tests keeps in its setup the knowledge of which sequence of validations should be used, and how it only uses test doubles for NoBotClickValidator.

4. Avoid exposing internals.

The fact that we’re injecting into ClickValidation an object, ClickParamsValidator, that we realized we didn’t need to double is a smell which points to the possibility that ClickParamsValidator is an internal detail of ClickValidation instead of its peer. So by injecting it, we’re coupling ClickValidation users, or at least the code that creates it, to an internal detail of ClickValidation: ClickParamsValidator.

A better version of this code would hide ClickParamsValidator by instantiating it inside ClickValidation’s constructor:

With this change ClickValidation recovers the knowledge of the sequence of validations which in the previous section was located in the code that created ClickValidation.
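In Python, this version might look roughly like the sketch below. The mandatory parameter names, the `is_valid` method and the `AlwaysHumanValidator` stub are hypothetical, since the real ClickParamsValidator code is not shown in this post:

```python
class ClickParamsValidator:
    """Simple, stateless check; the mandatory parameters here are made up."""

    MANDATORY_PARAMS = ("id", "url")

    def is_valid(self, click: dict) -> bool:
        return all(param in click for param in self.MANDATORY_PARAMS)


class ClickValidation:
    def __init__(self, no_bot_click_validator) -> None:
        # ClickParamsValidator is an internal detail, so it is created here
        # instead of being injected; the sequence of validations lives here too.
        self._validators = [ClickParamsValidator(), no_bot_click_validator]

    def is_valid(self, click: dict) -> bool:
        return all(validator.is_valid(click) for validator in self._validators)


class AlwaysHumanValidator:
    """Illustration-only double for the injected bot validation."""

    def is_valid(self, click: dict) -> bool:
        return True


validation = ClickValidation(AlwaysHumanValidator())
print(validation.is_valid({"id": "1", "url": "https://example.com"}))
```

Only the collaborator worth doubling remains in the constructor signature.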

There are some stereotypes that can help us identify real collaborators (peers)[4]:

- Dependencies: services that the object needs from its environment so that it can fulfill its responsibilities.

- Notifications: other parts of the system that need to know when the object changes state or performs an action.

- Adjustments or Policies: objects that tweak or adapt the object’s behaviour to the needs of the system.

Following these stereotypes, we could argue that NoBotClickValidator is also an internal detail of ClickValidation and shouldn’t be exposed to the tests by injecting it. Hiding it we’d arrive at this other version of ClickValidation:

in which we have to inject the real dependencies of the validation, and no internal details are exposed to the client code. This version is very similar to the one we’d have got using test doubles only for infrastructure.

The advantage of this version would be that its tests would know the least possible about ClickValidation. They’d know only ClickValidation’s boundaries marked by the ports injected through its constructor, and ClickValidation’s public API. That will reduce the coupling between tests and production code, and facilitate refactorings of the validation logic.

The drawback is that the combinations of test cases in ClickValidationTest would grow, and many of those test cases would talk about situations happening in the validation boundaries that might be far apart from ClickValidation’s callers. This might make the tests hard to understand, especially if some of the validations have complex logic. When this problem gets severe, we may reduce it by injecting very complex validators and using test doubles for them. This is a trade-off in which we decide to accept some coupling with the internals of ClickValidation in order to improve the understandability of its tests. In our case, the bot detection was one of those complex components, so we decided to test it separately and inject it into ClickValidation so that we could double it in ClickValidation’s tests. That is why we kept the penultimate version of ClickValidation, in which we were injecting the click-not-made-by-a-bot validation.

Conclusion.

In this post, we tried to play with an example to show how, by listening to the tests[5], we can detect possible design problems, and how we can use that feedback to improve both the design of our code and its tests, when changes that expose those design problems are required.

In this case, the initial tests were fragile because the production code was procedural and had too many responsibilities. The tests were fragile also because they were using test doubles for some collaborators when it wasn’t worth doing so.

Then we showed how refactoring the original code to be more object-oriented and separating its responsibilities better could remove some of the fragility of the tests. We also showed how reducing the use of test doubles only to those collaborators that really need to be substituted can improve the tests and reduce their fragility. Finally, we showed how we can go too far in trying to make the tests flexible and robust, and accidentally stop protecting a business rule, and how a less flexible version of the tests can fix that.

When faced with fragility due to coupling between tests and the code being tested caused by using test doubles, it’s easy and very usual to “blame the mocks”, but, we believe, it would be more productive to listen to the tests to notice which improvements in our design they are suggesting. If we act on this feedback the test doubles give us about our design, we can use test doubles to our advantage, as powerful feedback tools[6] that help us improve our designs, instead of just suffering and blaming them.

Acknowledgements.

Many thanks to my Codesai colleagues Alfredo Casado, Fran Reyes, Antonio de la Torre and Manuel Tordesillas, and to my Aprendices colleagues Paulo Clavijo, Álvaro García and Fermin Saez for their feedback on the post, and to my colleagues at LIFULL Connect for all the mobs we enjoy together.

Footnotes:

I think that if your design follows a different OO style, it might be preferable to stick to a classical TDD style which nearly limits the use of test doubles only to infrastructure and undesirable side-effects.

Monday, June 24, 2019

Interesting Talk: "Solving Problems the Clojure Way"

Saturday, May 25, 2019

The curious case of the negative builder

Recently, one of the teams I’m coaching at my current client asked me to help them with a problem they were experiencing while using TDD to add and validate new mandatory query string parameters[1]. This is a shortened version (validating fewer parameters than the original code) of the tests they were having problems with:

and this is the implementation of the QueryStringBuilder used in this test:

which is a builder with a fluent interface that follows to the letter a typical implementation of the pattern. There are even libraries that help you to automatically create this kind of builder[2].

However, in this particular case, implementing the QueryStringBuilder following this typical recipe causes a lot of problems. Looking at the test code, you can see why.

To add a new mandatory parameter, for example sourceId, following the TDD cycle, you would first write a new test asserting that a query string lacking the parameter should not be valid.

So far so good. The problem comes when you change the production code to make this test pass: at that moment you’ll see how the first test, which was asserting that a query string with all the parameters was valid, starts to fail (if you compare the query string of that test with the one in the new test, you’ll see that they are the same). Not only that, all the previous tests that were asserting that a query string was invalid because a given parameter was lacking won’t be “true” anymore, because after this change they could fail for more than one reason.

So to carry on, you’d need to fix the first test and also change all the previous ones so that they fail again only for the reason described in the test name:

That’s a lot of rework on the tests only for adding a new parameter, and the team had to add many more. The typical implementation of a builder was not helping them.

The problem we’ve just explained can be avoided by choosing a default value that creates a valid query string and using what I call “a negative builder”, a builder with methods that remove parts instead of adding them. So we refactored together the initial version of the tests and the builder, until we got to this new version of the tests:

which used a “negative” QueryStringBuilder:

After this refactoring, to add the sourceId we wrote this test instead:

which only involved updating the valid method in QueryStringBuilder and adding a method that removes the sourceId parameter from a valid query string.

Now when we changed the code to make this last test pass, no other test failed or started to have descriptions that were not true anymore.
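To make the idea concrete, here is a small Python sketch of a negative builder. Only sourceId and the existence of a valid method come from the text; the other parameter names, their values and the `without_source_id` and `build` method names are assumptions made for the example.

```python
from urllib.parse import urlencode


class QueryStringBuilder:
    """Starts from a valid query string; its methods remove parts instead of adding them."""

    def __init__(self, params: dict) -> None:
        self._params = dict(params)

    @classmethod
    def valid(cls) -> "QueryStringBuilder":
        # The single place to update when a new mandatory parameter appears.
        return cls({"clickId": "1234", "sourceId": "5678", "country": "es"})

    def without_source_id(self) -> "QueryStringBuilder":
        self._params.pop("sourceId", None)
        return self

    def build(self) -> str:
        return urlencode(self._params)


valid_query_string = QueryStringBuilder.valid().build()
lacking_source_id = QueryStringBuilder.valid().without_source_id().build()
print(valid_query_string)
print(lacking_source_id)
```

Each "invalid" test removes exactly one parameter from a valid query string, so adding a new mandatory parameter cannot make older tests fail for a second reason.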

Leaving behind the typical recipe and adapting the idea of the builder pattern to the context of the problem at hand, led us to a curious implementation, a “negative builder”, that made the tests easier to maintain and improved our TDD flow.

Acknowledgements.

Many thanks to my Codesai colleagues Antonio de la Torre and Fran Reyes, and to all the colleagues of the Prime Services Team at LIFULL Connect for all the mobs we enjoy together.

Footnotes:

Sunday, April 7, 2019

The Beverages Prices Refactoring kata: a kata to practice refactoring away from an awful application of inheritance.

I created the Beverages Prices Refactoring kata for the Deliberate Practice Program I’m running at Lifull Connect offices in Barcelona (previously Trovit). Its goal is to practice refactoring away from a bad usage of inheritance.

The code computes the price of the different beverages that are sold in a coffee house. There are some supplements that can be added to those beverages. Each supplement increases the price a bit. Not all combinations of drinks and supplements are possible.

Just having a quick look at the tests of the initial code would give you an idea of the kind of problems it might have:

If that’s not enough have a look at its inheritance hierarchy:

To make things worse, we are asked to add an optional cinnamon supplement that costs 0.05€ to all our existing catalog of beverages. We think we should refactor this code a bit before introducing the new feature.

We hope you have fun practicing refactoring with this kata.

Tuesday, March 5, 2019

Interesting Talk: "The Art of Refactoring"

Thursday, January 3, 2019

Avoid using subscriptions only as app-state getters

Introduction.

Subscriptions in re-frame or re-om are query functions that extract data from the app-state and provide it to view functions in the right format. When we use subscriptions well, they provide a lot of value[1], because they avoid having to keep derived state in the app-state and they dumb down the views, which end up being simple “data in, screen out” functions.

However, things are not that easy. When you start working with subscriptions, it might happen that you end up using them as mere getters of app-state. This is a missed opportunity because using subscriptions in this way, we won’t take advantage of all the value they can provide, and we run the risk of leaking pieces of untested logic into our views.

An example.

We’ll illustrate this problem with a small real example written with re-om subscriptions (in an app using re-frame subscriptions the problem would look similar). Have a look at this piece of view code in which some details have been elided for brevity’s sake:

this code is using subscriptions written in the horizon.domain.reports.dialogs.edit namespace.

The misuse of subscriptions we’d like to show appears on the following piece of the view:

Notice how the only thing that we need to render this piece of view is next-generation. To compute its value the code is using several subscriptions to get some values from the app-state and binding them to local vars (delay-unit, start-at, delay-number and date-modes). Those values are then fed to a couple of private functions also defined in the view (get-next-generation and delay->interval) to obtain the value of next-generation.

This is a bad use of subscriptions. Remember subscriptions are query functions on app-state that, used well, help to make views as dumb (with no logic) as possible. If you push as much logic as possible into subscriptions, you might achieve views that are so dumb you hardly need to test them, and decide to limit your unit tests to do only subcutaneous testing of your SPA.

Refactoring: placing the logic in the right place.

We can refactor the code shown above to remove all the leaked logic from the view by writing only one subscription called next-generation which will produce the only information that the view needs. As a result both get-next-generation and delay->interval functions will get pushed into the logic behind the new next-generation subscription and disappear from the view.

This is the resulting view code after this refactoring:

and this is the resulting pure logic of the new subscription. Notice that, since get-next-generation function wasn’t pure, we had to change it a bit to make it pure:

After this refactoring the view is much dumber. The previously leaked (and untested) logic in the view (the get-next-generation and delay->interval functions) has been removed from it. Now that logic can be easily tested through the new next-generation subscription. This design is also much better than the previous one because it hides how we obtain the data that the view needs: now both the view and the tests ignore, and so are not coupled to, how the data the view needs is obtained. We might refactor both the app-state and the logic now in get-next-generation and delay->interval functions without affecting the view. This is another example of how what is more stable than how.
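The original code is ClojureScript, but the same separation can be illustrated with a language-agnostic sketch in Python. Everything in it is an assumption: the shape of the app-state, the date arithmetic and the function names only mimic the names mentioned above, and the real logic of get-next-generation is not shown in this post.

```python
from datetime import datetime, timedelta


def delay_to_interval(delay_number: int, delay_unit: str) -> timedelta:
    """Hypothetical counterpart of delay->interval; delay_unit is e.g. 'days' or 'weeks'."""
    return timedelta(**{delay_unit: delay_number})


def next_generation(app_state: dict, now: datetime) -> datetime:
    """'Subscription': a pure query on app_state. `now` is passed in to keep it pure."""
    dialog = app_state["edit-dialog"]
    interval = delay_to_interval(dialog["delay-number"], dialog["delay-unit"])
    return max(dialog["start-at"], now) + interval  # made-up rule, for illustration


def next_generation_view(value: datetime) -> str:
    """'View': data in, screen out. It holds no logic worth testing."""
    return f"Next generation: {value:%Y-%m-%d}"


app_state = {
    "edit-dialog": {
        "start-at": datetime(2019, 1, 10),
        "delay-number": 2,
        "delay-unit": "days",
    }
}
rendered = next_generation_view(next_generation(app_state, now=datetime(2019, 1, 3)))
print(rendered)
```

The view only receives the derived value, so the app-state shape and the date logic can change without touching it.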

Summary

The idea to remember is that subscriptions by themselves don’t make code more testable and maintainable. It’s the way we use subscriptions that produces better code. For that, the logic must be in the right place, which is not inside the view but behind the subscriptions that provide the data that the view needs. If we keep writing “getter” subscriptions and placing logic in views, we won’t gain all the advantages the subscriptions concept provides and we’ll write poorly designed views coupled to leaked bunches of (very likely untested) logic.

Acknowledgements.

Many thanks to André Stylianos Ramos and Fran Reyes for giving us great feedback to improve this post and for all the interesting conversations.

Footnotes: